I am currently working on a smart-guide digital human prototype with my team. My role covers the full creation of the digital human and its environment, while my teammates are integrating an LLM-powered AI so users can interact with this 3D virtual character in real time. The character will run on hardware systems we are also building, designed for use in high-traffic public spaces.

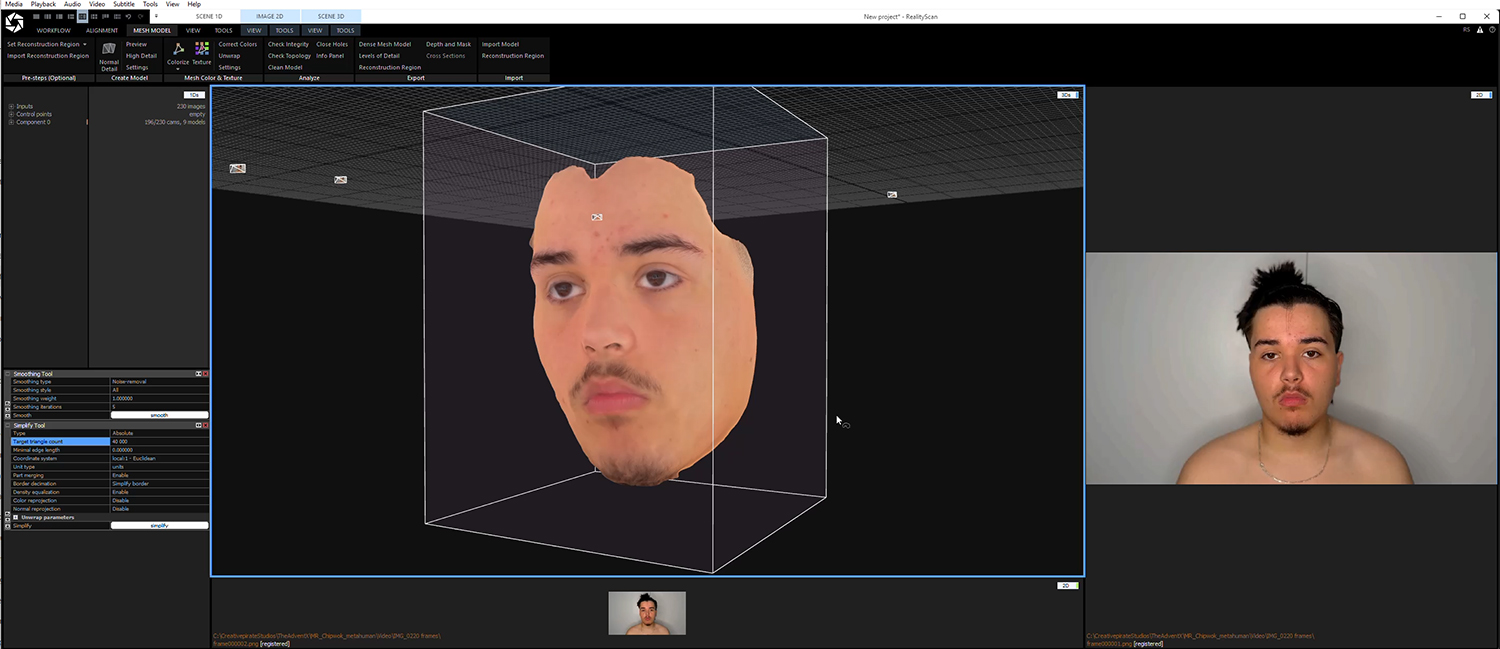

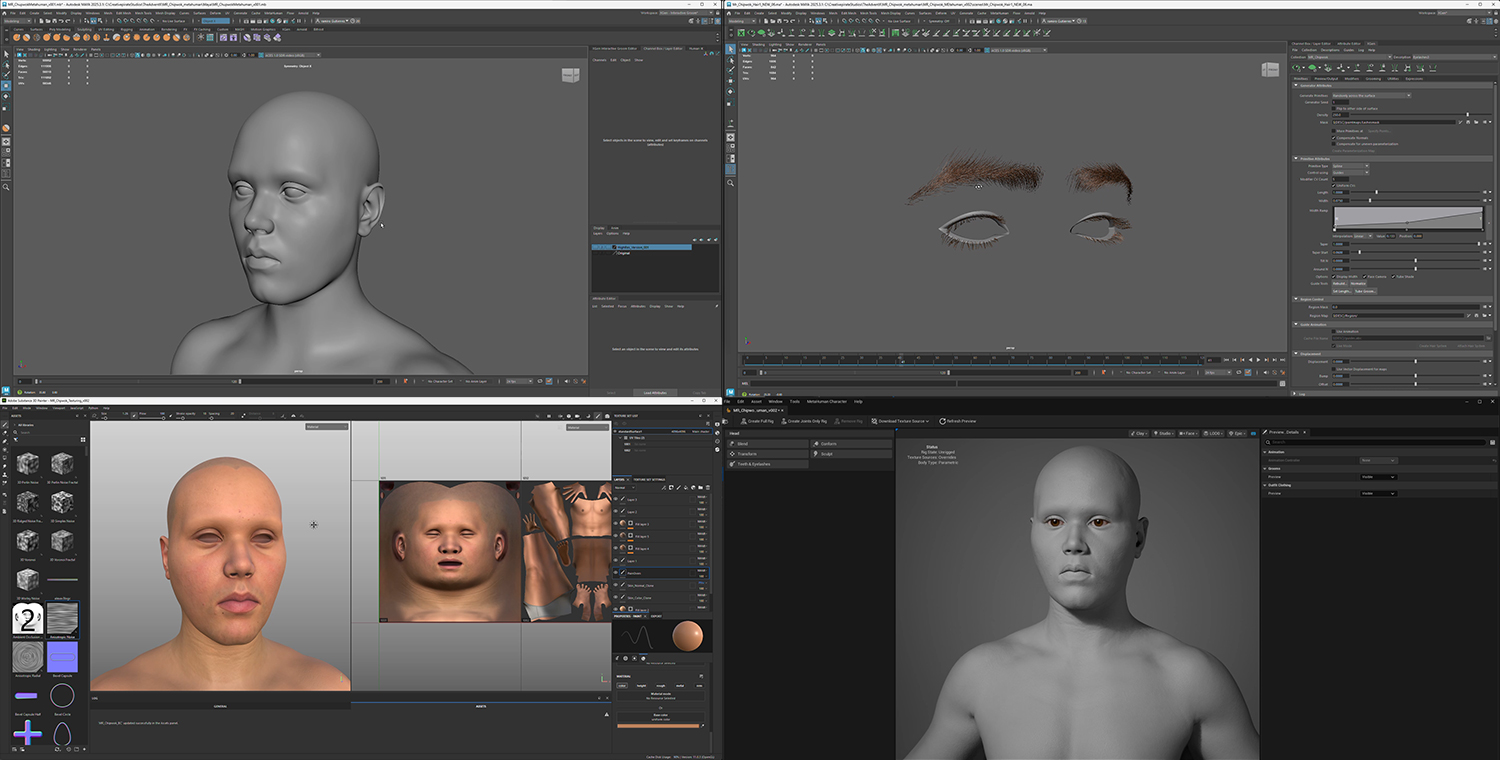

I’m developing the MetaHuman primarily using the Mesh-to-MetaHuman workflow in Unreal Engine 5.6. The process started by scanning my son, Bruno, then retopologizing the scan in ZBrush, followed by final refinements in Maya before bringing the mesh back into MetaHuman. From there, I used Live Link for real-time performance capture.

This project is still a work in progress, but here is where things stand so far.

In this version, I added some of the holographic motion graphics and HUD interface elements.

Environment creation, camera blocking, and lighting

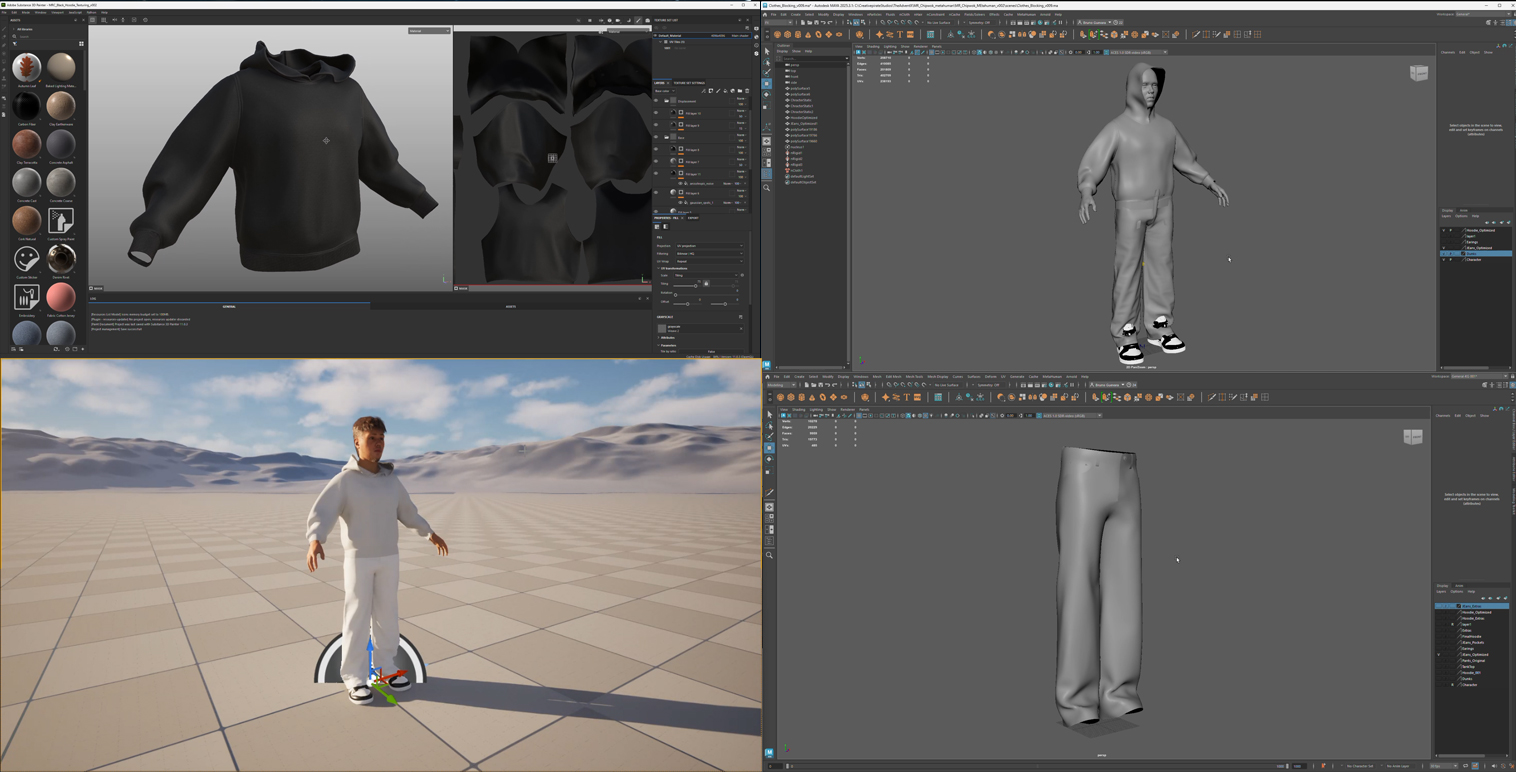

Live Link performance

Creation of garments and accessories

Retopologizing, creating the groom (hair), and texture painting

I took a series of photos of Bruno and used RealityScan to generate the 3D model through photogrammetry.